How does my cell phone know what I'm photographing?

I was in the garden earlier + snapped the last crumbs of snow from today. Something about "snow" appeared on my cell phone; next to flowers it said "flower", and for green stuff it said "plant". When I photographed the sunset, it said "sunrise / sunset", + for writing it said "text".

Then there's also: "Create adapted landscape images with intelligently adjusted camera settings", "Increase the quality of text images" etc.

So how does it know what to show there? And is that just a hint or do I have to adjust something?

It's an almost new phone! My previous one (11.5 years old) also took beautiful photos, panoramas and videos, but it didn't have any such functions!

Please explain to a layperson! LOL

Oh, it's a "Huawei P30 lite"!

I wish all forum members, especially those who answer here, a happy and healthy new week! :-)

The app recognizes snow, flowers and various animals and then classifies them.

It's amazing what the devices can do these days.

Close-ups are not bad here, but I would like to get closer!

Thank you, I wish you a happy and healthy new week! :-)

That's just the AI mode (artificial intelligence), which you can also switch off (tap "AI" at the top of the camera app).

It simply means that the software was trained beforehand, using many similar images, to recognize "this is a plant / person / sky". The image is then processed slightly depending on what was recognized: with plants, for example, the color tones are made more saturated; with people, the skin tones are slightly softened; and with the sky, the blue tones are strongly saturated, etc.
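For the curious, here is a minimal Python sketch of the "process the image depending on the scene" step, assuming the scene has already been recognized. The scene names and saturation factors are made-up assumptions; Huawei does not publish how its AI mode actually adjusts images, and a real phone does far more than change saturation.

```python
import colorsys

# Hypothetical saturation factors per recognized scene (assumed values,
# not Huawei's real settings).
SCENE_SATURATION = {
    "plant": 1.3,     # greens a bit more vivid
    "sky": 1.5,       # blues strongly saturated
    "portrait": 0.9,  # skin tones slightly toned down (assumed meaning of "softened")
}


def adjust_pixel(rgb, scene):
    """Scale the saturation of one (R, G, B) pixel with values 0-255."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    factor = SCENE_SATURATION.get(scene, 1.0)  # unknown scene: no change
    s = min(1.0, s * factor)                   # saturation must stay <= 1
    r, g, b = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(c * 255) for c in (r, g, b))


def adjust_image(pixels, scene):
    """Apply the scene-specific adjustment to every pixel of a flat pixel list."""
    return [adjust_pixel(p, scene) for p in pixels]


leaf = (100, 150, 100)
print(adjust_pixel(leaf, "plant"))  # greener: red and blue drop, green stays
```

This also shows why photos can end up looking "exaggerated": the same boost is applied whether or not the real scene was that colorful.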

It's nice, but sometimes you might prefer a photo that looks the way things did in reality, not one with totally exaggerated colors.

The whole thing is a kind of artificial intelligence.

The camera app essentially scans the area captured by the lens, compares it with a database of information and thus recognizes what you want to photograph.

Your mobile phone then sets the appropriate parameters so that the picture works as well as possible.
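The two steps (recognize the scene, then pick settings for it) can be sketched roughly like this. It's a toy: the "recognition" here only looks at the average color, whereas real phones use trained neural networks, and all thresholds and setting values are invented. Only the general idea of brightening snow scenes is a common photography rule of thumb.

```python
def classify_scene(pixels):
    """Crude stand-in for the real recognizer: look at the average color only."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    if min(r, g, b) > 200:
        return "snow"      # very bright and nearly colorless
    if g > r and g > b:
        return "plant"
    if b > r and b > g:
        return "sky"
    if r > g and r > b:
        return "sunset"
    return "unknown"


# Hypothetical camera parameters per scene (invented values).
SCENE_SETTINGS = {
    "snow":   {"exposure_ev": 1.0,  "saturation": 1.0},  # brighten so snow isn't grey
    "plant":  {"exposure_ev": 0.0,  "saturation": 1.3},
    "sky":    {"exposure_ev": -0.3, "saturation": 1.5},
    "sunset": {"exposure_ev": -0.7, "saturation": 1.4},
}
DEFAULT_SETTINGS = {"exposure_ev": 0.0, "saturation": 1.0}


def choose_settings(pixels):
    """Recognize the scene, then look up the matching settings."""
    scene = classify_scene(pixels)
    return scene, SCENE_SETTINGS.get(scene, DEFAULT_SETTINGS)


snowy_garden = [(245, 248, 252)] * 100
print(choose_settings(snowy_garden))
```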

If Google (or whoever) has a million pictures of trees and people, their programs can compare them with your picture and determine whether it is a person or a tree.
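The "compare with lots of stored pictures" idea matches a simple technique called k-nearest neighbors. This is my own illustration, not necessarily what Google or Huawei use (phones today mostly run trained neural networks instead). Each picture is reduced to a few made-up numbers (average red, green, blue), and a new picture gets the label that most of its closest stored pictures have.

```python
import math
from collections import Counter

# Hypothetical "database": (average R, G, B) of a stored picture + its label.
DATABASE = [
    ((60, 140, 50), "tree"),
    ((70, 150, 60), "tree"),
    ((50, 120, 40), "tree"),
    ((200, 160, 140), "person"),
    ((190, 150, 130), "person"),
    ((210, 170, 150), "person"),
]


def knn_classify(features, database, k=3):
    """Label a new picture by majority vote among its k most similar stored pictures."""
    by_distance = sorted(database, key=lambda item: math.dist(features, item[0]))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


print(knn_classify((65, 145, 55), DATABASE))
```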

There are now algorithms that are able to learn patterns from large amounts of data, in this case image categories. One variant (supervised learning) is that people label which category each picture belongs to, and the algorithm can then use these labeled pictures to assign new, unknown pictures to categories.
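A very small example of "learn from labeled pictures, then assign unknown ones" is a nearest-centroid classifier. It's a deliberately simple stand-in chosen for illustration: it "learns" the average of each human-labeled category and later assigns a new picture to the closest average. The features and training data are made up.

```python
import math


def train_centroids(examples):
    """Learning step: average the feature vectors of each human-labeled category."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [x / counts[label] for x in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign an unknown picture to the category whose average it is closest to."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Made-up training data: (average R, G, B) and the label a person assigned.
labeled = [
    ((245, 248, 252), "snow"),
    ((235, 240, 250), "snow"),
    ((60, 140, 50), "plant"),
    ((80, 160, 70), "plant"),
]
model = train_centroids(labeled)
print(predict(model, (240, 242, 249)))
```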

Without going into the technical implementation now, I'll try to describe it like this:

In very old buildings you can often see on the floor where people walked most often, because the floor is more worn there. Through the very large number of people over a long time, the floor has, so to speak, learned the movement pattern of people.
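The worn-floor analogy boils down to counting: tiles that many people stepped on end up "marked". A tiny sketch with invented footsteps (real learning algorithms don't literally count like this, it's only the analogy in code):

```python
from collections import Counter


def learned_path(footsteps, min_visits):
    """Tiles stepped on at least min_visits times: the pattern the floor 'learned'."""
    wear = Counter(footsteps)  # how often each tile was stepped on
    return {tile for tile, count in wear.items() if count >= min_visits}


# Invented footsteps: each is the (row, column) of a floor tile.
footsteps = [
    (0, 1), (1, 1), (2, 1), (3, 1),   # visitor walks straight to the door
    (0, 1), (1, 1), (2, 1), (3, 1),   # next visitor, same route
    (0, 1), (1, 1), (1, 2), (1, 3),   # one visitor turns off to the right
]
print(sorted(learned_path(footsteps, min_visits=2)))
```

The single detour to the right is ignored because it wasn't walked often enough, just like a path that only one person ever took.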

However, these algorithms go well beyond that. The floor just described learned only a single property. Algorithms that learn to recognize images learn many hundreds to thousands of properties.

In the same way, they also learn the relationships between the properties / patterns. For example, if "there were a lot of people here" overlaps with "there was a ladies' room at this point", one can conclude that "there were a lot of women here".
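Combining two properties into a new one can be shown with a single artificial "neuron": a weighted sum plus a threshold. The weights below are hand-picked assumptions so that it only fires when both properties are present; in a real network such weights are learned, not set by hand.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of feature values followed by a simple on/off threshold."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hypothetical weights: fires only for "many people" AND "ladies' room".
WEIGHTS = (1.0, 1.0)
BIAS = -1.5

for many_people in (0, 1):
    for ladies_room in (0, 1):
        many_women = neuron((many_people, ladies_room), WEIGHTS, BIAS)
        print(many_people, ladies_room, "->", many_women)
```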

What is special about these algorithms, however, is that you don't have to tell them which properties to use to categorize the data, which means that any abstract data can be examined for patterns. (People couldn't meaningfully assign images to categories based on the color intensities of pixels alone.)

They learn this by themselves, solely by being told what they got right and what they got wrong. It has often been shown that these algorithms sometimes pay attention to completely different properties than humans do, and sometimes assign images that seem completely unrelated to us to the same category, even though they are actually quite reliable at the assignment.
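One of the oldest algorithms that learns only from "right / wrong" feedback is the perceptron, used here as an illustration (today's image recognizers use more advanced methods). It never gets told which property matters; it only changes its factors when its guess was wrong. The two "brightness" numbers per picture and the labels are made up.

```python
def classify(weights, bias, features):
    """Guess +1 or -1 from a weighted sum of the input values."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else -1


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Classic perceptron rule: factors change only when the guess was wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, target in examples:            # target is +1 or -1
            if classify(weights, bias, features) != target:  # "that was wrong"
                mistakes += 1
                for i, x in enumerate(features):
                    weights[i] += learning_rate * target * x
                bias += learning_rate * target
        if mistakes == 0:                            # everything right: stop
            break
    return weights, bias


# Made-up examples: two brightness values per picture, +1 = "snow", -1 = "night".
examples = [((0.9, 0.8), 1), ((0.95, 0.9), 1), ((0.1, 0.2), -1), ((0.2, 0.1), -1)]
weights, bias = train_perceptron(examples)
print(weights, bias)
```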

A bit more technical you could describe it like this:

The algorithm receives data as input, e.g. the pixels of the image, and uses a mathematical, multi-dimensional function with a large number of randomly initialized factors to calculate a value that indicates which category the input belongs to.
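A stripped-down version of such a function, assuming just one layer (real networks stack many layers with extra nonlinear steps): every input pixel is multiplied by a randomly initialized factor, summed up once per category, and the highest score wins. The category names and pixel values are hypothetical.

```python
import random

CATEGORIES = ["snow", "plant", "sunset"]   # hypothetical label set


def init_factors(num_inputs, num_categories, seed=0):
    """One randomly initialized factor per (category, input pixel) pair."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(num_inputs)]
            for _ in range(num_categories)]


def scores(factors, pixels):
    """Multiply every input value by its factor and add up, once per category."""
    return [sum(f * p for f, p in zip(row, pixels)) for row in factors]


def predict(factors, pixels):
    """The category with the highest score is the answer."""
    s = scores(factors, pixels)
    return CATEGORIES[s.index(max(s))]


pixels = [0.9, 0.8, 0.95, 0.7]   # four made-up brightness values scaled to 0..1
factors = init_factors(len(pixels), len(CATEGORIES))
print(scores(factors, pixels), predict(factors, pixels))
```

With random factors the answer is of course meaningless; the training described next is what makes them useful.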

In the beginning, of course, the result is no better than guessing: with two categories it is wrong about 50% of the time (with more categories, even more often).
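An untrained model behaves roughly like random guessing, which is right about 1 out of k times for k equally common categories. A quick simulation (pure guessing, not an actual network) shows where the "50%" comes from:

```python
import random


def chance_accuracy(num_categories, trials=100_000, seed=1):
    """Estimate how often pure guessing hits the right category."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        truth = rng.randrange(num_categories)
        guess = rng.randrange(num_categories)
        hits += truth == guess
    return hits / trials


for k in (2, 4, 10):
    print(k, "categories:", round(chance_accuracy(k), 3), "expected", 1 / k)
```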

So you start by letting the algorithm guess (calculate the function with the chosen factors and the given input). Then you compare what it guessed with what it should have been (people have to determine the target result in advance, at least with the simpler methods).

A so-called loss function then evaluates how good the guess was (how close it was to the correct category). Then the factors are adjusted accordingly; factors that were more responsible for the result are adjusted more.
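Here's one concrete version of "measure the error, then adjust the factors", using logistic regression with a cross-entropy loss. That's my choice of example; the answer above doesn't name a specific loss function. The key line is the update: each factor moves by (error × its input), so factors that contributed more to the result are changed more. Input and target are made up.

```python
import math


def predict_prob(weights, bias, x):
    """Probability that the input belongs to the category (logistic function)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy(p, target):
    """Loss: small when the predicted probability p matches the 0/1 target."""
    eps = 1e-12  # avoid log(0)
    return -(target * math.log(p + eps) + (1 - target) * math.log(1 - p + eps))


def gradient_step(weights, bias, x, target, learning_rate=0.5):
    """Move every factor against its share of the error: (p - target) * x[i]."""
    error = predict_prob(weights, bias, x) - target
    new_weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
    new_bias = bias - learning_rate * error
    return new_weights, new_bias


x, target = [1.0, 0.1], 1          # made-up input, correct answer "yes"
weights, bias = [0.0, 0.0], 0.0
print("loss before:", cross_entropy(predict_prob(weights, bias, x), target))
weights, bias = gradient_step(weights, bias, x, target)
print("loss after: ", cross_entropy(predict_prob(weights, bias, x), target))
print("new factors:", weights)     # first factor moved ten times as much
```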

In the long run, the factors approach their best possible values, the ones with which the algorithm is correct most often. Depending on how you set up the function, the resulting algorithm can be better or worse.
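Putting it together: repeat "guess, measure the loss, adjust the factors" many times and the average loss goes down while accuracy goes up. A minimal self-contained sketch, again assuming logistic regression and tiny made-up data (two brightness-like values, 1 = "snow", 0 = "not snow"):

```python
import math
import random


def train(examples, epochs=200, learning_rate=0.5, seed=0):
    """Repeat guess -> measure loss -> adjust factors, many times over."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(len(examples[0][0]))]  # random start
    bias = 0.0
    history = []  # average loss per pass over the data
    for _ in range(epochs):
        total_loss = 0.0
        for x, target in examples:
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            total_loss += -math.log(p if target == 1 else 1.0 - p)
            error = p - target
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
        history.append(total_loss / len(examples))
    return weights, bias, history


def accuracy(weights, bias, examples):
    """Share of examples the trained factors classify correctly."""
    correct = 0
    for x, target in examples:
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        correct += (1 if z > 0 else 0) == target
    return correct / len(examples)


data = [([0.9, 0.8], 1), ([0.95, 0.9], 1), ([0.85, 0.95], 1),
        ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.25], 0)]
w, b, losses = train(data)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, accuracy {accuracy(w, b, data):.0%}")
```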

Thanks!

It's amazing how it works!

Thank you, I wish you a happy and healthy new week! :-)

Thanks!

The technology is amazing!

They're beautiful photos; nothing looks exaggerated, I think!

Night photos aren't that great, despite the setting!

I'll try to add a few more photos if I can!

The cell phone also takes better close-up / macro photos than the old cell phone + the camera I won here in the forum.

Thank you, I wish you a happy and healthy new week! :-)

As a computer science student, I actually wrote something like this myself.

I pulled a few thousand pictures from the internet and then wrote a program that could recognize cars, motorcycles and pedestrians. It worked pretty well.

The theory behind it is very complex, but if you understand the basics you can "recreate" something like that.

Google etc. of course have much more sophisticated stuff.

Thanks!

It's amazing what technology can do today!

They're already good photos. The dealer really sold me something good!

Thank you, I wish you a happy and healthy new week! :-)

Well, as for the first paragraph, the backlight is probably broken. You can usually have it replaced for around 30 euros (material costs; labor depends on the shop) or so.

Thanks!

I read it, but I'll read it again tomorrow since it's late now. But it's understandable.

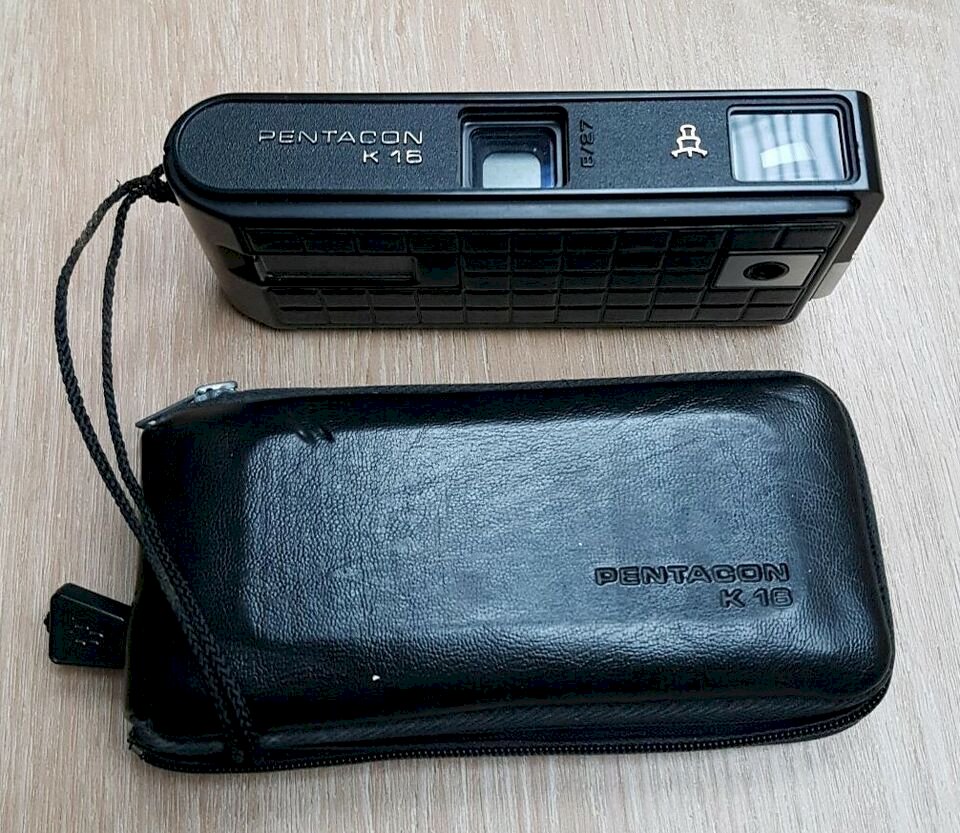

But when I think back: in the past you had to stand still for a photo, then came film, which my first camera used. I also had to fiddle around with film, then I had a pocket camera:

Dad had one like that (or something similar?) + made slides with it:

https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcQA3_7D098Kb2G-2tXyPK4CeMCFDeRqruGWvg&usqp=CAU

Thank you, I wish you a happy and healthy new week! :-)

I would have saved the money for a new laptop! It's too late now! GN8! :-)

Thank you, everyone deserves it, but I only have 1 star to give!

I wish jort a nice and healthy weekend coming soon! :-)